System overview

Server operating system: CentOS 7

Development environment: VMware Workstation Pro

OpenStack release: Rocky

Node network plan

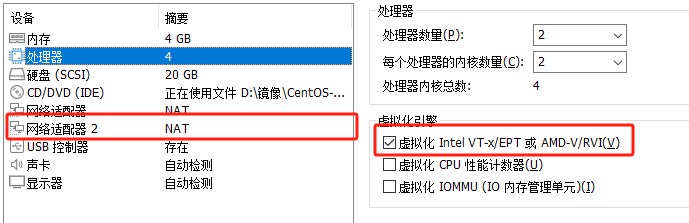

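Note: enable hardware virtualization for every virtual machine, and give each one at least two network interfaces.

Basic configuration

Set the hostname (both nodes)

controller

hostnamectl set-hostname controller

compute

hostnamectl set-hostname compute

Disable the firewall (both nodes)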

systemctl stop firewalld

systemctl disable firewalld

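setenforce 0

Note: setenforce 0 lasts only until the next reboot; to keep SELinux permissive afterwards, also set SELINUX=permissive in /etc/selinux/config.

Reboot

reboot

Configure hostname resolution (both nodes)

Run vi /etc/hosts and add the following lines

192.168.160.7 controller

192.168.160.6 compute

Note: use the IP addresses of your own virtual machines.

Test

[root@controller ~]# ping compute

[root@compute ~]# ping controller

Check that the two nodes can ping each other.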
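If the hosts entries are added by script rather than by hand, it helps to make the step safe to run twice. The following is only a sketch under stated assumptions: `HOSTS_FILE` and `add_host` are hypothetical names, the file defaults to a temporary file, and the addresses are the example ones from this guide.

```shell
#!/bin/sh
# Append "IP NAME" to a hosts file only if NAME is not already mapped.
# HOSTS_FILE is a hypothetical variable; point it at /etc/hosts on a real node.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

add_host() {
    ip="$1"; name="$2"
    # Match NAME as a whole word on any line, ignoring text after a '#'.
    if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$HOSTS_FILE"; then
        echo "skip: ${name} already present"
    else
        printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
        echo "added: ${ip} ${name}"
    fi
}

add_host 192.168.160.7 controller
add_host 192.168.160.6 compute
add_host 192.168.160.7 controller   # second call changes nothing
```

On a real node this would be run as root with `HOSTS_FILE=/etc/hosts` and your own addresses.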

Configure the yum repositories (both nodes)

mkdir ori_repo-config

mv /etc/yum.repos.d/* ./ori_repo-config/

touch /etc/yum.repos.d/CentOS-PrivateLocal.repo

Run vi /etc/yum.repos.d/CentOS-PrivateLocal.repo and add the following content

[base]

name=CentOS-$releasever - Base - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/os/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/os/$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/os/$basearch/

gpgcheck=1

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

[updates]

name=CentOS-$releasever - Updates - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/updates/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/updates/$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/updates/$basearch/

gpgcheck=1

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

[extras]

name=CentOS-$releasever - Extras - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/extras/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/extras/$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/extras/$basearch/

gpgcheck=1

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

[centosplus]

name=CentOS-$releasever - Plus - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/centosplus/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/centosplus/$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/centosplus/$basearch/

gpgcheck=1

enabled=0

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

[contrib]

name=CentOS-$releasever - Contrib - mirrors.aliyun.com

failovermethod=priority

baseurl=http://mirrors.aliyun.com/centos/$releasever/contrib/$basearch/
        http://mirrors.aliyuncs.com/centos/$releasever/contrib/$basearch/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever/contrib/$basearch/

gpgcheck=1

enabled=0

gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

[centos-openstack-stein]

name=CentOS-7 - OpenStack stein

baseurl=http://mirrors.aliyun.com/centos/7/cloud/x86_64/openstack-stein/

gpgcheck=0

enabled=1

[centos-qemu-ev]

name=CentOS-$releasever - QEMU EV

baseurl=http://mirrors.aliyun.com/centos/7/virt/x86_64/kvm-common/

gpgcheck=0

enabled=1

[centos-ceph-nautilus]

name=CentOS-7 - Ceph Nautilus

baseurl=http://mirrors.aliyun.com/centos/7/storage/x86_64/ceph-nautilus/

gpgcheck=0

enabled=1

[centos-nfs-ganesha28]

name=CentOS-7 - NFS Ganesha 2.8

baseurl=http://mirrors.aliyun.com/centos/7/storage/x86_64/nfsganesha-28/

gpgcheck=0

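enabled=1

Clear the cache and rebuild it

yum clean all

yum makecache

Update the packages

yum -y update

Install the base packages

Install the NTP service (both nodes, with different configuration)

yum install -y chrony

controller node: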

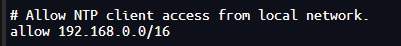

输入vi /etc/chrony.conf修改代码

allow 192.168.0.0/16 #去掉注释 指定网段访问

compute节点:

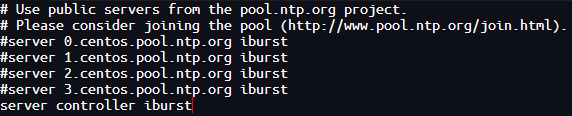

输入vi /etc/chrony.conf 修改代码

#server 0.centos.pool.ntp.org iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst #都用#注释掉

server controller iburst #添加这一行
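The same compute-node edit can be made without opening an editor. This is a minimal sketch that works on a throwaway sample file; `CONF` is a hypothetical stand-in for /etc/chrony.conf, and the sample content only approximates the stock file.

```shell
#!/bin/sh
# Work on a sample copy; CONF is a hypothetical stand-in for /etc/chrony.conf.
CONF="$(mktemp)"
cat > "$CONF" <<'EOF'
server 0.centos.pool.ntp.org iburst
server 1.centos.pool.ntp.org iburst
server 2.centos.pool.ntp.org iburst
server 3.centos.pool.ntp.org iburst
driftfile /var/lib/chrony/drift
EOF

# Comment out every active "server" line.
sed 's/^server /#server /' "$CONF" > "$CONF.new" && mv "$CONF.new" "$CONF"

# Add the controller as the only time source.
echo 'server controller iburst' >> "$CONF"

cat "$CONF"
```

The order matters: the sed pass runs before the controller line is appended, so the new line is not commented out.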

两个节点开启NTP服务

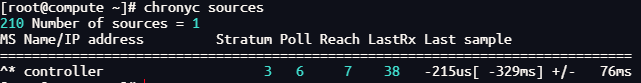

systemctl enable chronyd && systemctl restart chronyd测试(compute节点)

chronyc sources

出现以上情况,测试成功

安装openstack客户端(两节点都需要)

yum install python-openstackclient -y安装openstack-selinux(两节点都需要)

yum install openstack-selinux -y安装数据库(controller节点)

Install MariaDB

yum install mariadb mariadb-server python2-PyMySQL -y

Configure the database

Run vi /etc/my.cnf.d/openstack.cnf and add the following

[mysqld]

bind-address=192.168.160.7 # IP address of the controller node

default-storage-engine=innodb

innodb_file_per_table=on

max_connections=4096

collation-server=utf8_general_ci

character-set-server=utf8

Note: use the IP address of your own virtual machine.

Start the service

systemctl enable mariadb && systemctl start mariadb

Initialize the database (the root password is root)

[root@controller ~]# mysql_secure_installation

Enter current password for root (enter for none): # press Enter

Set root password? [Y/n] Y

New password: root

Re-enter new password: root

Remove anonymous users? [Y/n] Y

Disallow root login remotely? [Y/n] n

Remove test database and access to it? [Y/n] Y

Reload privilege tables now? [Y/n] Y

Install the message queue (controller node)

Install rabbitmq-server

yum install rabbitmq-server -y

Start the service

systemctl enable rabbitmq-server && systemctl start rabbitmq-server

Create the openstack user, set its password, and grant it permissions

rabbitmqctl add_user openstack 123456

rabbitmqctl set_permissions openstack ".*" ".*" ".*"

Install the Memcached caching service (controller node)

Install Memcached

yum install -y memcached

Edit the configuration file

Run vi /etc/sysconfig/memcached and change OPTIONS="-l 127.0.0.1,::1" to the following

OPTIONS="-l 127.0.0.1,::1,controller"

Start the service

systemctl enable memcached && systemctl start memcached

Install the Keystone identity service (controller node)

Create the keystone database and user

mysql -uroot -proot

create database keystone;

grant all privileges on keystone.* to 'keystone'@'localhost' identified by '123456';

grant all privileges on keystone.* to 'keystone'@'%' identified by '123456';

flush privileges;

exit安装keystone包

yum install openstack-keystone httpd mod_wsgi -y配置keystone

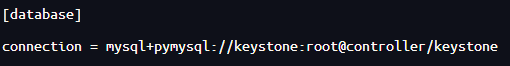

输入 vi /etc/keystone/keystone.conf 使用/+搜索字符 查找代码并修改

[database]

connection = mysql+pymysql://keystone:123456@controller/keystone

[token]

provider = fernet

Sync the database

su -s /bin/sh -c "keystone-manage db_sync" keystone

Initialize the Fernet key repositories

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

Bootstrap the identity service

keystone-manage bootstrap --bootstrap-password 123456 \
  --bootstrap-admin-url http://controller:35357/v3/ \
  --bootstrap-internal-url http://controller:5000/v3/ \
  --bootstrap-public-url http://controller:5000/v3/ \
  --bootstrap-region-id RegionOne

Run vi /etc/httpd/conf/httpd.conf and add the following line

ServerName controller

Create the signing key and authentication configuration

Run vi /etc/httpd/conf.d/wsgi-keystone.conf and add the following

Listen 5000

Listen 35357

<VirtualHost *:5000>

WSGIDaemonProcess keystone-public processes=5 threads=1 user=keystone group=keystone display-name=%{GROUP}

WSGIProcessGroup keystone-public

WSGIScriptAlias / /usr/bin/keystone-wsgi-public

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

LimitRequestBody 114688

<IfVersion >= 2.4>

ErrorLogFormat "%{cu}t %M"

</IfVersion>

ErrorLog /var/log/httpd/keystone.log

CustomLog /var/log/httpd/keystone_access.log combined

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

</VirtualHost>

<VirtualHost *:35357>

WSGIDaemonProcess keystone-admin processes=5 threads=1 user=keystone group=keystone display-name=%{GROUP}

WSGIProcessGroup keystone-admin

WSGIScriptAlias / /usr/bin/keystone-wsgi-admin

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

LimitRequestBody 114688

<IfVersion >= 2.4>

ErrorLogFormat "%{cu}t %M"

</IfVersion>

ErrorLog /var/log/httpd/keystone.log

CustomLog /var/log/httpd/keystone_access.log combined

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

</VirtualHost>

Alias /identity /usr/bin/keystone-wsgi-public

<Location /identity>

SetHandler wsgi-script

Options +ExecCGI

WSGIProcessGroup keystone-public

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

</Location>

Alias /identity_admin /usr/bin/keystone-wsgi-admin

<Location /identity_admin>

SetHandler wsgi-script

Options +ExecCGI

WSGIProcessGroup keystone-admin

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

</Location>启动http服务

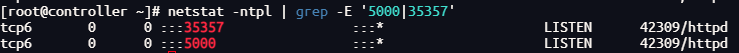

systemctl enable httpd && systemctl start httpd监测端口是否开启

yum install net-tools -y

netstat -ntpl | grep -E '5000|35357'
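The pattern '5000|35357' matches those digits anywhere on a line, including inside a PID or a longer port number such as 15000. A stricter check is sketched below; `check_ports` is a hypothetical helper, and the sample text only imitates the shape of netstat output.

```shell
#!/bin/sh
# Read `netstat -ntpl` style output on stdin and report whether each given
# port appears as a local listening port. check_ports is an illustrative name.
check_ports() {
    listing="$(cat)"
    status=0
    for port in "$@"; do
        # Require ":PORT" followed by whitespace, so 5000 does not match
        # 15000 or a process ID that happens to contain the same digits.
        if printf '%s\n' "$listing" | grep -Eq ":${port}[[:space:]]"; then
            echo "port ${port}: listening"
        else
            echo "port ${port}: NOT listening"
            status=1
        fi
    done
    return "$status"
}

# Sample input standing in for real netstat output.
sample='tcp6  0  0 :::5000   :::*  LISTEN  1234/httpd
tcp6  0  0 :::35357  :::*  LISTEN  1234/httpd'
printf '%s\n' "$sample" | check_ports 5000 35357
```

On the controller the equivalent call would be `netstat -ntpl | check_ports 5000 35357`, which returns a non-zero status if either port is missing.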

Configure environment variables

export OS_USERNAME=admin

export OS_PASSWORD=123456

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://controller:35357/v3

export OS_IDENTITY_API_VERSION=3

Create the projects and users

openstack domain create --description "An Example Domain" example

openstack project create --domain default --description "Service Project" service

openstack project create --domain default --description "Demo Project" demo

openstack user create --domain default --password 123456 demo

openstack role create user

openstack role add --project demo --user demo user

Verify the keystone configuration (password: 123456)

unset OS_AUTH_URL OS_PASSWORD

openstack --os-auth-url http://controller:35357/v3 --os-project-domain-name Default --os-user-domain-name Default --os-project-name admin --os-username admin token issue

openstack --os-auth-url http://controller:5000/v3 --os-project-domain-name Default --os-user-domain-name Default --os-project-name demo --os-username demo token issue

Create the environment variable files

Run vi admin-openrc and add the following

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=123456

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

Run vi demo-openrc and add the following

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=demo

export OS_USERNAME=demo

export OS_PASSWORD=123456

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

Verify that the environment variables work

source admin-openrc

openstack token issue

Install the Glance image service (controller node)

Glance database configuration

Create the Glance database and user

mysql -uroot -proot

create database glance;

grant all privileges on glance.* to 'glance'@'localhost' identified by '123456';

grant all privileges on glance.* to 'glance'@'%' identified by '123456';

exit
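The create-database-and-grant step recurs for keystone, glance, nova, and neutron with only the names changing. A small generator can reduce typing errors; this is a sketch, and `service_db_sql` is a hypothetical helper rather than anything shipped with OpenStack or MariaDB. It only prints SQL and does not connect to a server.

```shell
#!/bin/sh
# Print the CREATE/GRANT statements for one service database.
# service_db_sql is a hypothetical helper; arguments are DB name, user, password.
service_db_sql() {
    db="$1"; user="$2"; pass="$3"
    cat <<EOF
CREATE DATABASE IF NOT EXISTS ${db};
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'localhost' IDENTIFIED BY '${pass}';
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'%' IDENTIFIED BY '${pass}';
EOF
}

# Preview the statements for the glance database used in this guide.
service_db_sql glance glance 123456
```

After reviewing the output, it could be piped into the client, for example `service_db_sql glance glance 123456 | mysql -uroot -proot`.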

source admin-openrc

openstack user create --domain default glance --password 123456将admin角色添加到glance用户和项目中

openstack role add --project service --user glance admin创建Glance服务实体

openstack service create --name glance --description "Openstack Image" image创建Glance服务认证端点

openstack endpoint create --region RegionOne image public http://controller:9292

openstack endpoint create --region RegionOne image internal http://controller:9292

openstack endpoint create --region RegionOne image admin http://controller:9292安装Glance包

yum install openstack-glance -y配置glance-api文件

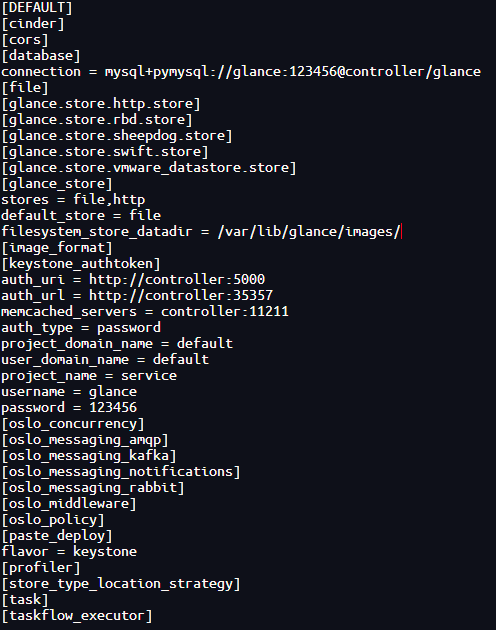

cp /etc/glance/glance-api.conf{,.bak}

grep -Ev '^$|#' /etc/glance/glance-api.conf.bak > /etc/glance/glance-api.conf输入vi /etc/glance/glance-api.conf 修改代码

[database]

connection = mysql+pymysql://glance:123456@controller/glance

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = 123456

[paste_deploy]

flavor = keystone

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/
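The cp and grep -Ev pair that precedes each configuration edit in this guide keeps a .bak copy and rewrites the file without blank lines or commented lines. The sketch below shows the effect on a throwaway file; `SAMPLE` and its content are made up for illustration.

```shell
#!/bin/sh
# Demonstrate the backup-and-strip step on a throwaway file.
# SAMPLE is a hypothetical stand-in for a file such as glance-api.conf.
SAMPLE="$(mktemp)"
cat > "$SAMPLE" <<'EOF'
[DEFAULT]
# a full-line comment

#debug = false
[database]
connection = sqlite:///demo.db  # trailing comment
EOF

# Keep the original, then rewrite the file without empty lines
# and without any line that contains a '#'.
cp "$SAMPLE" "$SAMPLE.bak"
grep -Ev '^$|#' "$SAMPLE.bak" > "$SAMPLE"

cat "$SAMPLE"
```

Note that the pattern drops every line containing a # anywhere, so the sample connection line is removed as well. A pattern such as '^[[:space:]]*(#|$)' would remove only blank lines and full-line comments.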

配置glance-registry文件

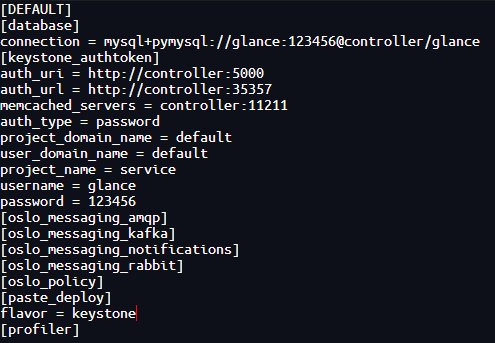

cp /etc/glance/glance-registry.conf{,.bak}

grep -Ev '^$|#' /etc/glance/glance-registry.conf.bak > /etc/glance/glance-registry.conf输入vi /etc/glance/glance-registry.conf 修改代码

[database]

connection = mysql+pymysql://glance:123456@controller/glance

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = 123456

[paste_deploy]

flavor = keystone

Sync the image service database

su -s /bin/sh -c "glance-manage db_sync" glance

Start the glance services

systemctl enable openstack-glance-api.service openstack-glance-registry.service

systemctl start openstack-glance-api.service openstack-glance-registry.service

Verify the glance service

yum -y install wget

wget http://download.cirros-cloud.net/0.4.0/cirros-0.4.0-x86_64-disk.img

glance image-create --name "cirros" --disk-format qcow2 --container-format bare --progress < cirros-0.4.0-x86_64-disk.img

openstack image list

Install the Nova compute service (controller node)

Create the databases and user

mysql -uroot -proot

CREATE DATABASE nova_api;

CREATE DATABASE nova;

CREATE DATABASE nova_cell0;

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY '123456';

exit创建nova用户

source admin-openrc

openstack user create --domain default --password 123456 nova将nova用户添加admin角色

openstack role add --project service --user nova admin创建nova服务实体

openstack service create --name nova --description "OpenStack Compute" compute创建nova服务认证端点

openstack endpoint create --region RegionOne compute public http://controller:8774/v2.1

openstack endpoint create --region RegionOne compute internal http://controller:8774/v2.1

openstack endpoint create --region RegionOne compute admin http://controller:8774/v2.1创建placement服务用户

openstack user create --domain default --password 123456 placement将placement用户为项目服务admin角色

openstack role add --project service --user placement admin创建placement服务实体

openstack service create --name placement --description "Placement API" placement创建placement服务认证端点

openstack endpoint create --region RegionOne placement public http://controller:8778

openstack endpoint create --region RegionOne placement internal http://controller:8778

openstack endpoint create --region RegionOne placement admin http://controller:8778安装配置nova

安装软件包

yum install openstack-nova-api openstack-nova-conductor openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler openstack-nova-placement-api -y配置nova文件

cp /etc/nova/nova.conf{,.bak}

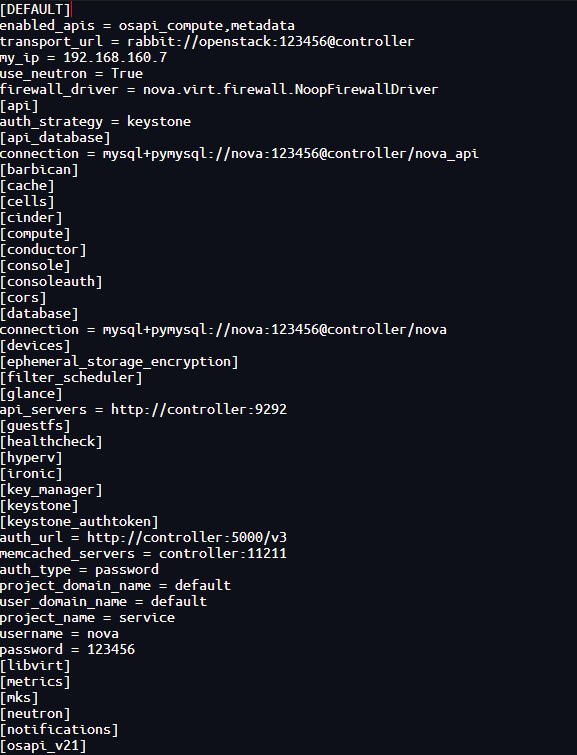

grep -Ev '^$|#' /etc/nova/nova.conf.bak > /etc/nova/nova.conf输入vi /etc/nova/nova.conf 修改代码

[DEFAULT]

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:123456@controller

my_ip = 192.168.160.7

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api_database]

connection = mysql+pymysql://nova:123456@controller/nova_api

[database]

connection = mysql+pymysql://nova:123456@controller/nova

[api]

auth_strategy = keystone

[keystone_authtoken]

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = 123456

[vnc]

enabled = true

server_listen = $my_ip

server_proxyclient_address = $my_ip

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[placement]

os_region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = 123456

Run vi /etc/httpd/conf.d/00-nova-placement-api.conf and add the following

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>同步数据库

su -s /bin/sh -c "nova-manage api_db sync" nova

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

su -s /bin/sh -c "nova-manage db sync" nova验证 nova、 cell0、 cell1数据库是否注册正确

nova-manage cell_v2 list_cells启动服务

systemctl start openstack-nova-api.service openstack-nova-consoleauth openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

systemctl enable openstack-nova-api.service openstack-nova-consoleauth openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service安装nova计算服务(compute节点)

安装配置计算节点

安装软件包

yum install openstack-nova-compute -y配置nova文件

cp /etc/nova/nova.conf{,.bak}

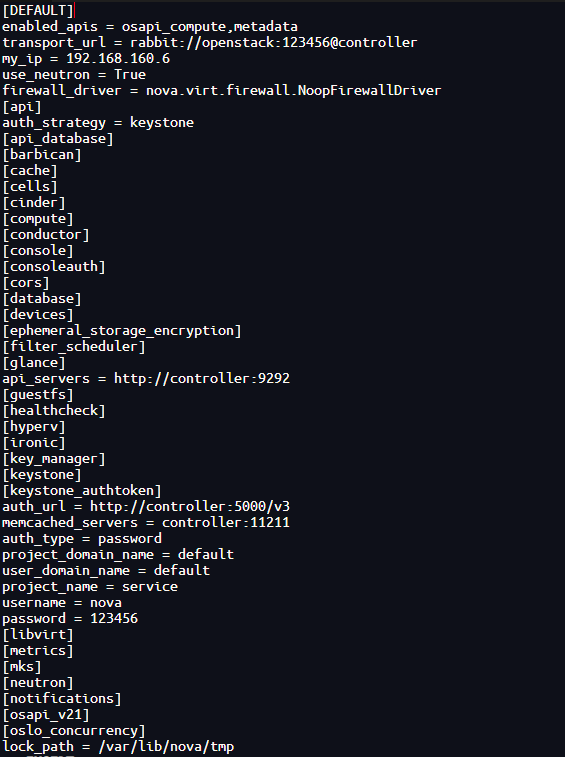

grep -Ev '^$|#' /etc/nova/nova.conf.bak > /etc/nova/nova.conf输入vi /etc/nova/nova.conf 修改代码

[DEFAULT]

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:123456@controller

my_ip = 192.168.160.6

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api]

auth_strategy = keystone

[keystone_authtoken]

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = 123456

[vnc]

enabled = True

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

novncproxy_base_url = http://controller:6080/vnc_auto.html

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[placement]

os_region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = 123456
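The earlier note about enabling virtualization in each virtual machine can be checked on the compute node before starting the services. The sketch below counts the vmx (Intel VT-x) and svm (AMD-V) CPU flags; `suggest_virt_type` is a hypothetical helper, and the two sample files are made up so the example does not depend on the host it runs on.

```shell
#!/bin/sh
# Suggest a libvirt virt_type from the CPU flags in a cpuinfo-style file.
# suggest_virt_type is a hypothetical helper; it defaults to /proc/cpuinfo.
suggest_virt_type() {
    cpuinfo="${1:-/proc/cpuinfo}"
    # Count lines that advertise Intel VT-x (vmx) or AMD-V (svm).
    count="$(grep -Ec '(vmx|svm)' "$cpuinfo" || true)"
    if [ "${count:-0}" -gt 0 ]; then
        echo "kvm"
    else
        echo "qemu"
    fi
}

# Two made-up samples: one CPU with the vmx flag and one without.
WITH_VT="$(mktemp)"; WITHOUT_VT="$(mktemp)"
echo 'flags : fpu vme de pse vmx sse sse2' > "$WITH_VT"
echo 'flags : fpu vme de pse sse sse2' > "$WITHOUT_VT"

suggest_virt_type "$WITH_VT"
suggest_virt_type "$WITHOUT_VT"
```

If the result on the real compute node is qemu, hardware acceleration is not available to the guest, and /etc/nova/nova.conf on that node would need a [libvirt] section with virt_type = qemu.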

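Start the services

systemctl enable libvirtd.service openstack-nova-compute.service

systemctl start libvirtd.service openstack-nova-compute.service

Verify the nova service

Run the following steps on the controller node

Confirm the compute node from the controller node

openstack compute service list --service nova-compute

Add the compute node to the cell database

su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

Configure automatic discovery of new compute nodes

Run vi /etc/nova/nova.conf and edit it as follows

[scheduler]

discover_hosts_in_cells_interval = 300

Verify the nova service

openstack compute service list

Install the Neutron service (controller node)

Install and configure the Neutron networking service

Create the database and user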

mysql -uroot -proot

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY '123456';

exit创建neutron用户

openstack user create --domain default --password 123456 neutron添加admin角色到neutron用户

openstack role add --project service --user neutron admin创建neutron服务实体

openstack service create --name neutron --description "OpenStack Networking" network创建网络服务API端点

openstack endpoint create --region RegionOne network public http://controller:9696

openstack endpoint create --region RegionOne network internal http://controller:9696

openstack endpoint create --region RegionOne network admin http://controller:9696配置Self-service networks

安装组件

yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables -y配置服务组件

cp /etc/neutron/neutron.conf{,.bak}

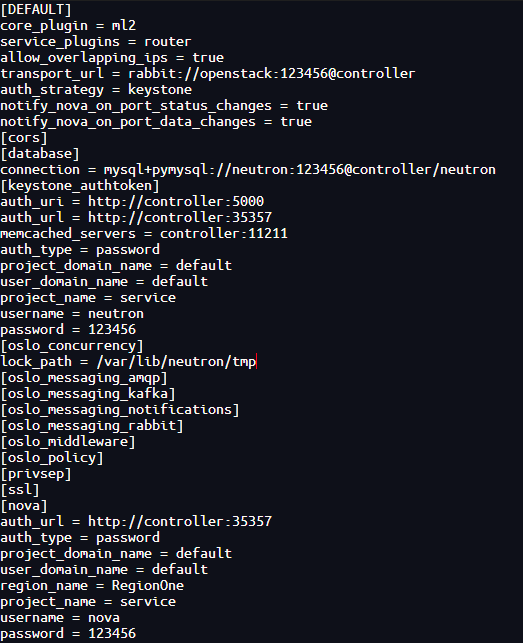

grep -Ev '^$|#' /etc/neutron/neutron.conf.bak > /etc/neutron/neutron.conf输入vi /etc/neutron/neutron.conf 修改代码

[database]

connection = mysql+pymysql://neutron:123456@controller/neutron

[DEFAULT]

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = true

transport_url = rabbit://openstack:123456@controller

auth_strategy = keystone

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = 123456

[nova]

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = 123456

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

Configure the layer-2 (ML2) plug-in

cp /etc/neutron/plugins/ml2/ml2_conf.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/plugins/ml2/ml2_conf.ini.bak > /etc/neutron/plugins/ml2/ml2_conf.ini

Run vi /etc/neutron/plugins/ml2/ml2_conf.ini and add the following

[ml2]

type_drivers = flat,vlan,vxlan

tenant_network_types = vxlan

mechanism_drivers = linuxbridge,l2population

extension_drivers = port_security

[ml2_type_flat]

flat_networks = provider

[ml2_type_vlan]

network_vlan_ranges = provider:1001:2000

[ml2_type_vxlan]

vni_ranges = 1:1000

[securitygroup]

enable_ipset = true

Configure the Linux bridge agent

cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak > /etc/neutron/plugins/ml2/linuxbridge_agent.ini

Run vi /etc/neutron/plugins/ml2/linuxbridge_agent.ini and add the following

[linux_bridge]

physical_interface_mappings = provider:ens33

[vxlan]

enable_vxlan = true

local_ip = 192.168.160.7

l2_population = true

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Enable kernel support for bridge filtering

Run vi /usr/lib/sysctl.d/00-system.conf and add the following

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

sysctl -p /usr/lib/sysctl.d/00-system.conf

Configure the layer-3 agent

cp /etc/neutron/l3_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/l3_agent.ini.bak > /etc/neutron/l3_agent.ini

Run vi /etc/neutron/l3_agent.ini and add the following

[DEFAULT]

interface_driver = linuxbridge

Configure the DHCP agent

cp /etc/neutron/dhcp_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/dhcp_agent.ini.bak > /etc/neutron/dhcp_agent.ini

Run vi /etc/neutron/dhcp_agent.ini and add the following

[DEFAULT]

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

Configure the metadata agent

Run vi /etc/neutron/metadata_agent.ini and add the following

[DEFAULT]

nova_metadata_host = controller

metadata_proxy_shared_secret = 123456

Run vi /etc/nova/nova.conf and add the following

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = 123456

service_metadata_proxy = true

metadata_proxy_shared_secret = 123456
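The metadata_proxy_shared_secret value is written in two files, metadata_agent.ini and nova.conf, and the two copies need to agree. A quick comparison is sketched below; `ini_value` is a hypothetical helper that ignores section names for brevity, and the two temporary files stand in for the real ones.

```shell
#!/bin/sh
# Print the value of KEY from an ini-style file (first match only).
# ini_value is a hypothetical helper; it does not distinguish sections.
ini_value() {
    key="$1"; file="$2"
    # Strip "KEY =" and surrounding blanks, printing only the value.
    sed -n "s/^[[:space:]]*${key}[[:space:]]*=[[:space:]]*//p" "$file" | head -n 1
}

# Sample files standing in for metadata_agent.ini and nova.conf.
AGENT="$(mktemp)"; NOVA="$(mktemp)"
printf '[DEFAULT]\nnova_metadata_host = controller\nmetadata_proxy_shared_secret = 123456\n' > "$AGENT"
printf '[neutron]\nservice_metadata_proxy = true\nmetadata_proxy_shared_secret = 123456\n' > "$NOVA"

if [ "$(ini_value metadata_proxy_shared_secret "$AGENT")" = "$(ini_value metadata_proxy_shared_secret "$NOVA")" ]; then
    echo "shared secrets match"
else
    echo "shared secrets differ"
fi
```

On the controller, the two paths would be /etc/neutron/metadata_agent.ini and /etc/nova/nova.conf.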

Start the services

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

systemctl restart openstack-nova-api.service

systemctl enable neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

systemctl start neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

systemctl enable neutron-l3-agent.service && systemctl start neutron-l3-agent.service安装Neutron服务(compute节点)

网络服务neutron安装及配置

安装组件

yum install openstack-neutron-linuxbridge ebtables ipset -y配置公共组件

cp /etc/neutron/neutron.conf{,.bak}

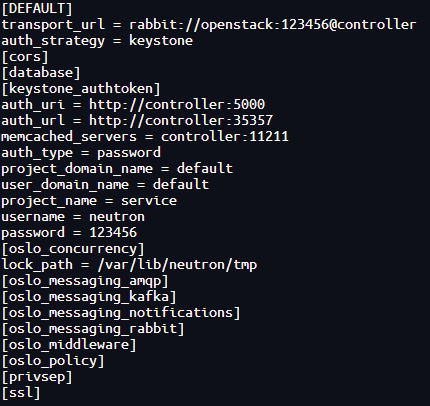

grep -Ev '^$|#' /etc/neutron/neutron.conf.bak > /etc/neutron/neutron.conf输入vi /etc/neutron/neutron.conf 添加以下代码

[DEFAULT]

transport_url = rabbit://openstack:123456@controller

auth_strategy = keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = 123456

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

Configure networking

Configure the Linux bridge agent

cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak > /etc/neutron/plugins/ml2/linuxbridge_agent.ini

Run vi /etc/neutron/plugins/ml2/linuxbridge_agent.ini and add the following

[vxlan]

enable_vxlan = true

local_ip = 192.168.160.6

l2_population = true

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Configure the compute service to use the networking service

Run vi /etc/nova/nova.conf and add the following

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = 123456

Enable kernel support for bridge filtering

Run vi /usr/lib/sysctl.d/00-system.conf and change it to the following

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

sysctl -p /usr/lib/sysctl.d/00-system.conf

Restart the compute service

systemctl restart openstack-nova-compute.service

Start the service

systemctl enable neutron-linuxbridge-agent.service && systemctl start neutron-linuxbridge-agent.service

Verify (controller node)

openstack network agent list

Install the Dashboard service (controller node)

Install and configure the Dashboard

Install the package

yum install openstack-dashboard -y

Configure the dashboard

Run vi /etc/openstack-dashboard/local_settings and add the following

OPENSTACK_HOST = "controller"

ALLOWED_HOSTS = ['*']

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {

'default': {

'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION': 'controller:11211',

}

}

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

OPENSTACK_API_VERSIONS = {

"identity": 3,

"image": 2,

"volume": 2,

}

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = 'Default'

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

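TIME_ZONE = "Asia/Shanghai"

Run vi /etc/httpd/conf.d/openstack-dashboard.conf and add the following line

WSGIApplicationGroup %{GLOBAL}

Start the services

systemctl restart httpd.service memcached.service

Verify through the web interface

In a browser, open http://<controller node IP>/dashboard to reach the OpenStack interface

Domain: default

User name: admin

Password: 123456

Create a cloud instance

Create the networks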

source admin-openrc

openstack network create --share --external --provider-physical-network provider --provider-network-type flat provider

openstack network list

openstack subnet create --network provider --allocation-pool start=192.168.160.80,end=192.168.160.90 --dns-nameserver 114.114.114.114 --gateway 192.168.160.2 --subnet-range 192.168.160.0/24 provider

openstack subnet list

source demo-openrc

openstack network create selfservice1

openstack subnet create --network selfservice1 --dns-nameserver 114.114.114.114 --gateway 172.16.1.1 --subnet-range 172.16.1.0/24 selfservice1-net1

source demo-openrc

openstack router create router

openstack router list

neutron router-interface-add router selfservice1-net1

neutron router-gateway-set router provider

source admin-openrc

ip netns

neutron router-port-list router

Create the flavors

openstack flavor create --id 0 --vcpus 1 --ram 64 --disk 1 m1.nano

openstack flavor create --id 1 --vcpus 1 --ram 1024 --disk 10 m2.nano

Create the key pair

source demo-openrc

ssh-keygen -q -N ""

Enter file in which to save the key (/root/.ssh/id_rsa): # press Enter to accept the default

openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey

Create the security group rules

openstack security group rule create --proto icmp default

openstack security group rule create --proto tcp --dst-port 22 default

openstack security group list

openstack security group rule list

Done! Time to celebrate ヾ(*´∀ ˋ*)ノ